I mostly use Python to build Web applications. A year ago I started learning Go, mainly for fun. In the meantime it turned out that I had to rewrite an old CGI application written in C, which had been running under the thttpd server in chroot mode. I started looking for a tool that would let me write a standalone Web application with an embedded Web server that is easy to chroot. At the same time I started playing with the web.go framework, mustache.go templates, Go's native http package and the GoMySQL database API. I found that Go with the http, mustache.go and GoMySQL packages would be ideal tools for the job, so I decided to try writing my application in Go.

During the work it turned out that I needed something more flexible than mustache.go and something more mature and less buggy than GoMySQL. In the end I wrote my application using Kasia.go templates and MyMySQL (I wrote these packages specifically for my application but decided to make them available to the Go community). The rewritten application works very well in production, even under much greater load than the previous one. I began to wonder how much faster (or maybe slower) Go is than Python for implementing standalone Web applications. I decided to run several tests, taking into account different frameworks and servers. For comparison, I picked the following Go packages:

raw Go http package,

web.go framework (it uses http package to run in standalone mode),

twister framework with its own HTTP server,

and the following Python Web servers/frameworks:

web.py framework with gunicorn WSGI server,

web.py framework with gevent WSGI server,

web.py framework using flup FastCGI to be a backend process for nginx server,

tornado asynchronous server/framework,

tornado with nginx as a load balancer.

For each case I wrote a simple application, slightly more complicated than the typical Hello World example. Each application consists of:

parsing parameters in URL path using regular expressions,

using for statement to create multiple line output,

using printf-like formatting function/expression to format output.

I think these operations are common in any Web application, so they should be included in any simple benchmark. Below are links to the source code for all test applications:

Testing environment

The test environment consisted of two PCs (traffic generator and application server) connected by a direct Gigabit Ethernet link.

Generator: 2 x Xeon 2.6 GHz with hyperthreading, Debian SID, kernel: 2.6.33.7.2-rt30-1-686 #1 SMP PREEMPT RT;

Server: MSI netbook with a dual-core Intel U4100 1.30 GHz, AC power connected, 64-bit Ubuntu 10.10, kernel: 2.6.35-25-generic #44-Ubuntu SMP, Python 2.6.6-2ubuntu2, web.py 0.34-2, flup 1.0.2-1, tornado 0.2-1, gunicorn 0.10.0-1, gevent 0.13.0-1, nginx 0.7.67-3ubuntu1 (config).

To generate HTTP traffic and measure the performance of each test application I used the siege benchmarking utility. Siege can simulate multiple users using multiple threads. I used the following command to generate traffic:

siege -c 200 -t 20s http://ADDRESS:PORT/Hello/100

or several such commands with a proportionally reduced -c parameter (when I ran multiple Python applications simultaneously). The command simulates traffic generated by 200 users and runs for 20 s. With the given URL, the Web application produces 100 lines of output for each request. The Go applications were compiled using Go release.2011-02-01.1.

Results

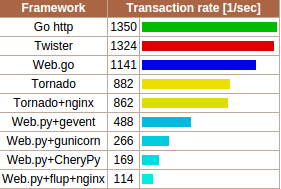

GOMAXPROCS=1, one Python process:

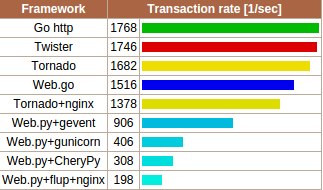

GOMAXPROCS=2, two Python processes working simultaneously:

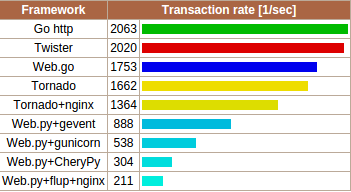

GOMAXPROCS=4, four Python processes working simultaneously:

Web.py+flup+nginx ran with the following flup server options: multiplexed=False, multithreaded=False. With multiplexed=True it ran slightly slower. Setting multithreaded=True didn't improve performance, and when only one process was running the nginx server reported errors such as:

[error] 18166#0: *66139 connect() to unix:/tmp/socket failed (11: Resource temporarily unavailable) while connecting to upstream

Multiple FastCGI processes were spawned using spawn-fcgi.

Conclusion

As you can see, Go wins in almost all test cases. The worse results of the web.go framework are probably due to the fact that it tries to find a static file for the given URL before running a handler function. I was surprised by the very high performance of the tornado framework. I was also surprised that the CherryPy server is faster than nginx+flup (I use web.py+flup+nginx for almost all my Python Web applications).
